7 - Log Linear and Neural LMs

ucla | CS 162 | 2024-02-06 12:24

Table of Contents

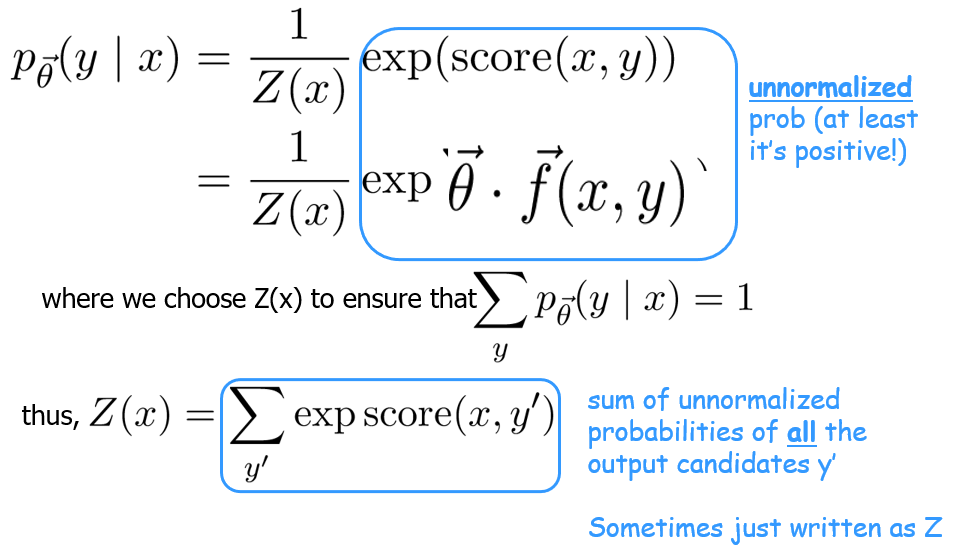

Log-Linear Language Models

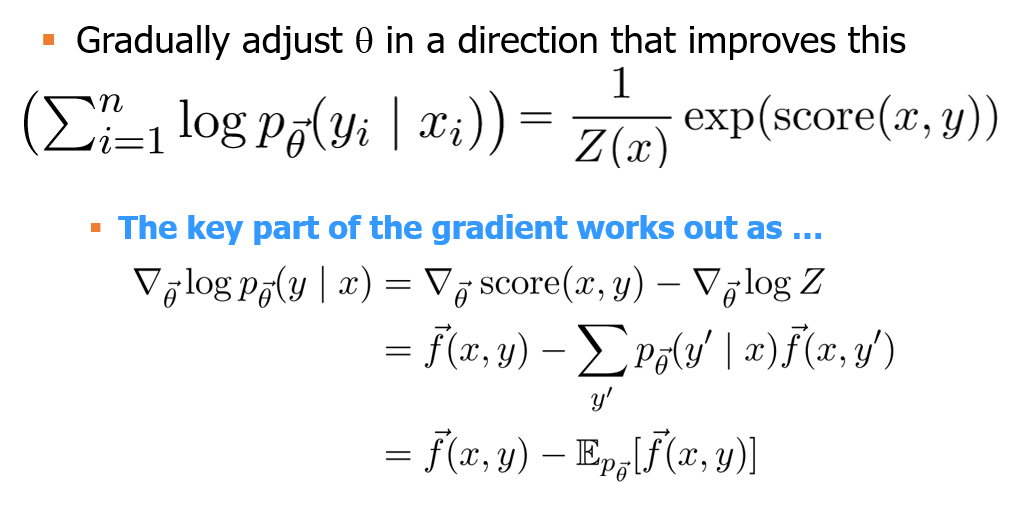

- we want to create a conditional distribution $p(y \mid x)$

- we can write the conditional probability distribution in terms of the weights as

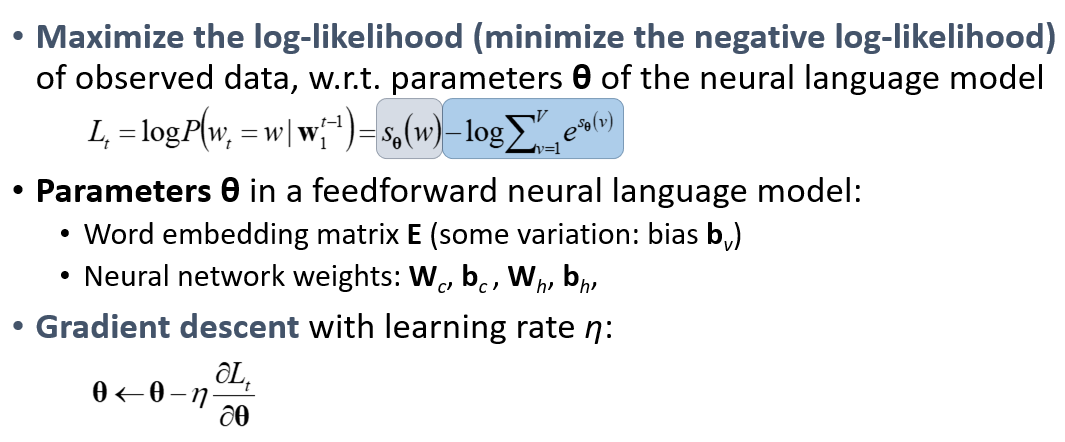

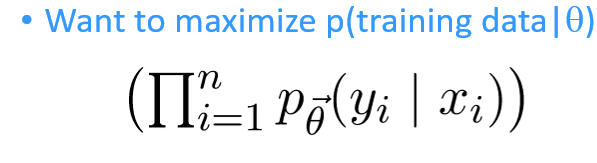
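A minimal sketch of such a log-linear conditional distribution, assuming the usual form in which each candidate $y$ gets a linear score $w \cdot f(x, y)$ that is then normalized with a softmax. The feature function `features`, the feature names, and the toy weights are hypothetical and chosen only for illustration; the lecture's own definition is in the figures above.

```python
import math

def features(x, y):
    """Hypothetical indicator features for a (context, next-word) pair."""
    return {
        f"bigram:{x}_{y}": 1.0,  # fires for this specific context/word pair
        f"unigram:{y}": 1.0,     # fires for the word regardless of context
    }

def score(weights, x, y):
    """Linear score w . f(x, y); features without a weight contribute 0."""
    return sum(weights.get(name, 0.0) * value
               for name, value in features(x, y).items())

def conditional_distribution(weights, x, vocab):
    """p(y | x; w) = exp(w . f(x, y)) / sum over y' of exp(w . f(x, y'))."""
    scores = {y: score(weights, x, y) for y in vocab}
    max_score = max(scores.values())  # subtract the max for numerical stability
    exps = {y: math.exp(s - max_score) for y, s in scores.items()}
    normalizer = sum(exps.values())
    return {y: e / normalizer for y, e in exps.items()}

# Toy setup (assumed values): the context is the previous word "the".
vocab = ["cat", "dog", "sat"]
weights = {"bigram:the_cat": 1.5, "unigram:dog": 0.5}
dist = conditional_distribution(weights, "the", vocab)
```

Because the scores are exponentiated and normalized, `dist` always sums to 1, and a larger weight on a feature that fires for a word raises that word's probability relative to the others.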

Training

- $p(y_i \mid x_i)$: the probability the model assigns to $y_i$ given $x_i$

- the thing we want to improve is to make the model's prob dist approach the RHS

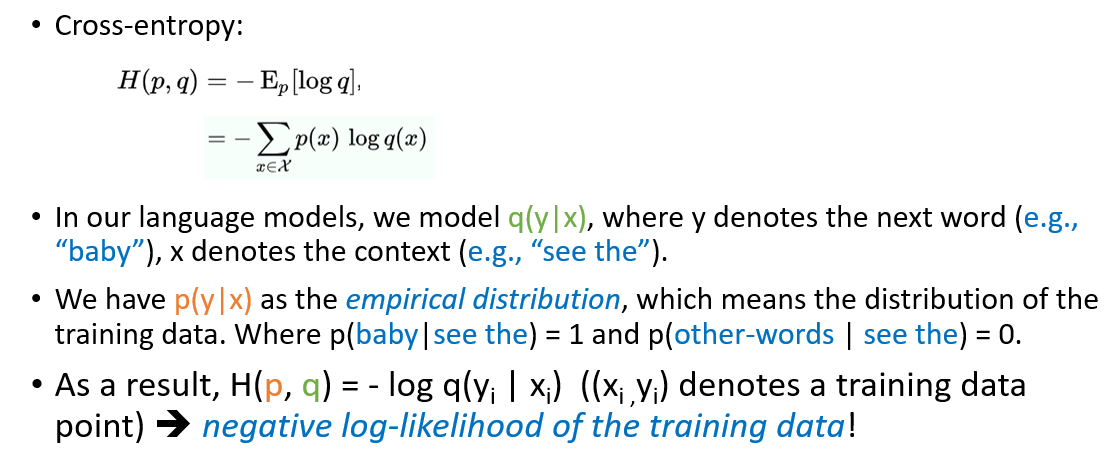
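As a hedged worked equation, here is the standard maximum-likelihood objective for a log-linear model and its gradient. The notation ($f$ for the feature function, $w$ for the weights, $N$ for the number of training pairs) is an assumption and may differ from the slide.

$$
\ell(w) = \sum_{i=1}^{N} \log p(y_i \mid x_i; w)
$$

$$
\nabla_w \ell(w) = \sum_{i=1}^{N} \Big( f(x_i, y_i) - \sum_{y'} p(y' \mid x_i; w) \, f(x_i, y') \Big)
$$

The gradient is the observed feature vector minus the feature vector expected under the model, so it vanishes exactly when the model's expected features match the ones seen in the data.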

Cross Entropy

- with the observed data as the target distribution, minimizing cross entropy is the same as minimizing the neg log likelihood of our model

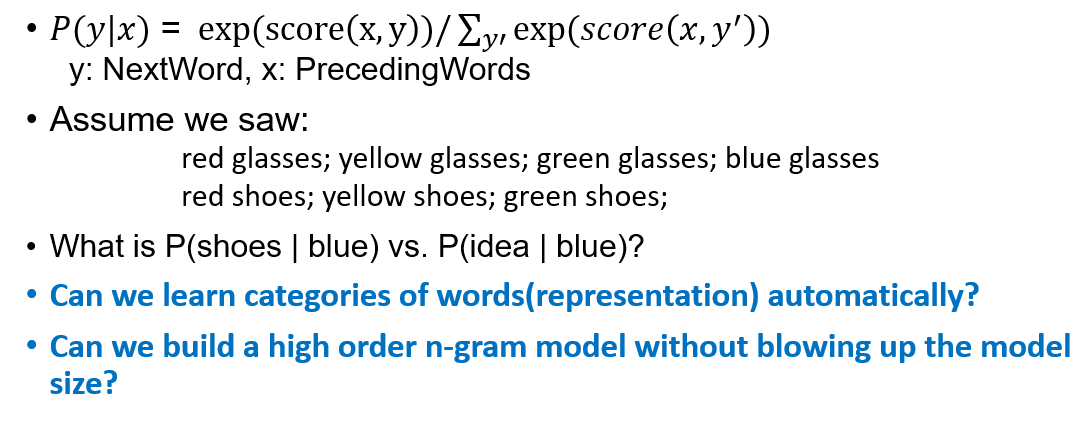
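A small sketch of why the two quantities coincide, assuming the target distribution is one-hot on the observed word. The probabilities in `model_dist` are made-up values, not output from any real model.

```python
import math

def cross_entropy(target_dist, model_dist):
    """H(q, p) = -sum_y q(y) * log p(y); terms with q(y) = 0 contribute nothing."""
    return -sum(q * math.log(model_dist[y])
                for y, q in target_dist.items() if q > 0)

# Hypothetical model output p(y | x) for one context.
model_dist = {"cat": 0.7, "dog": 0.2, "sat": 0.1}

# One-hot target: the observed next word is "cat".
one_hot = {"cat": 1.0, "dog": 0.0, "sat": 0.0}

ce = cross_entropy(one_hot, model_dist)
nll = -math.log(model_dist["cat"])  # negative log likelihood of the observed word
```

With a one-hot target only the term for the observed word survives the sum, so `ce` and `nll` are the same number.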

Generalization and OoD (unseen) samples

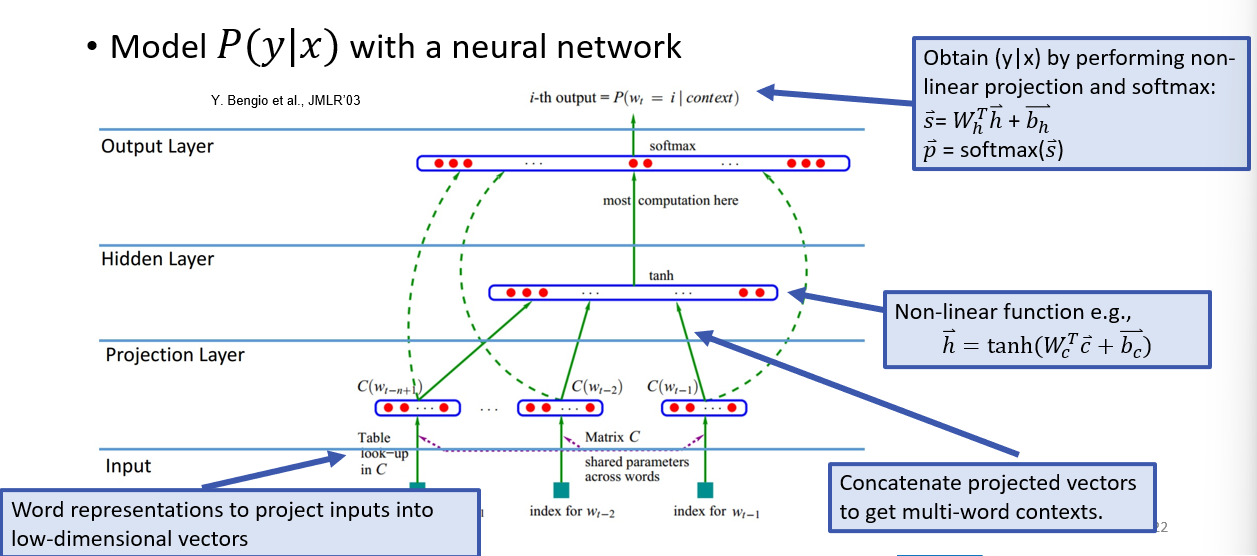

Neural LM

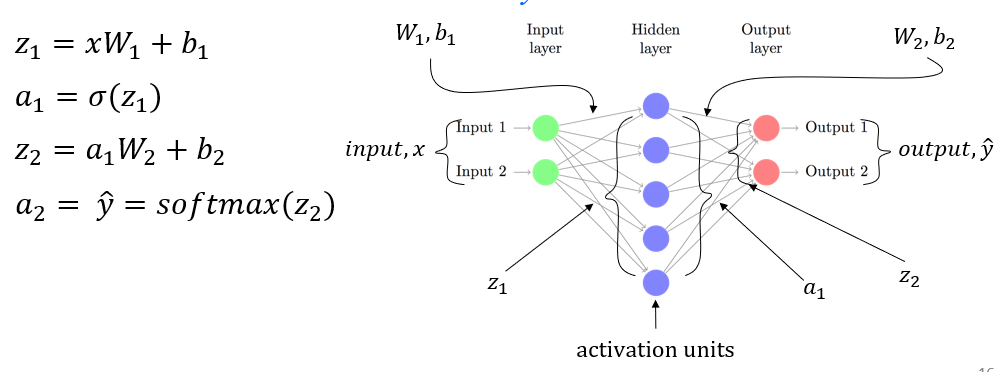

NN Review

- idea: use a NN as the LM, e.g., a FFNN (3-layer MLP)

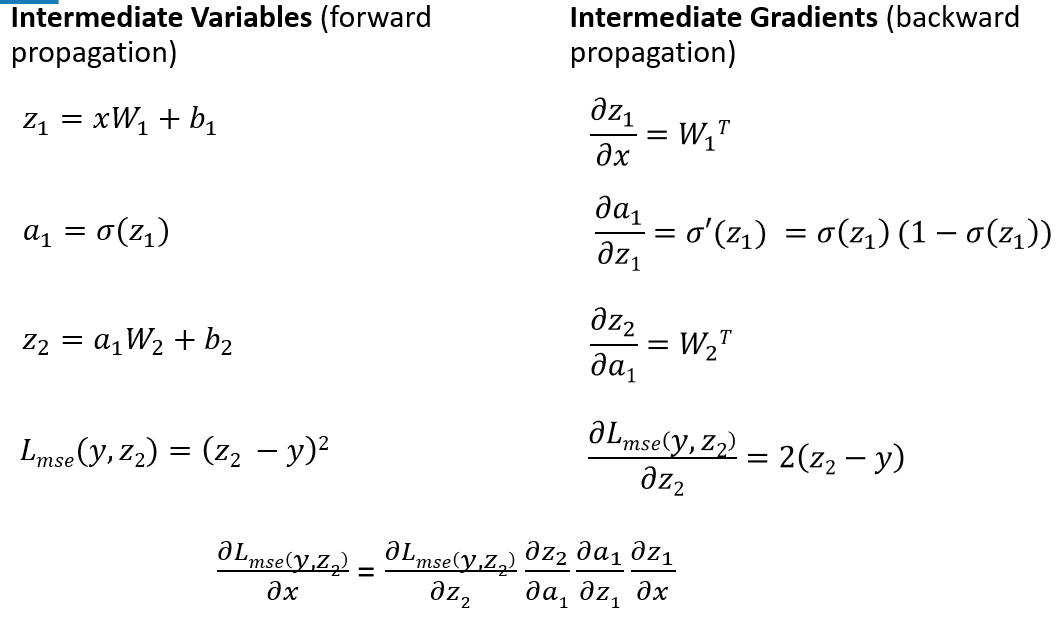

- forward pass

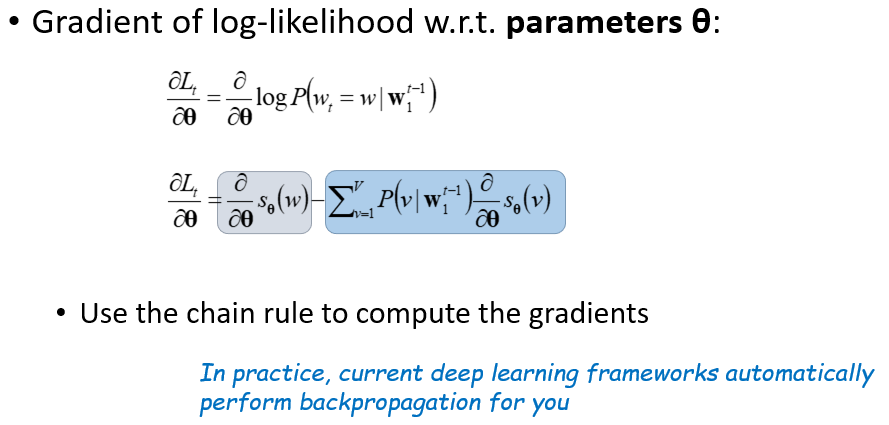

- backprop

- weight update

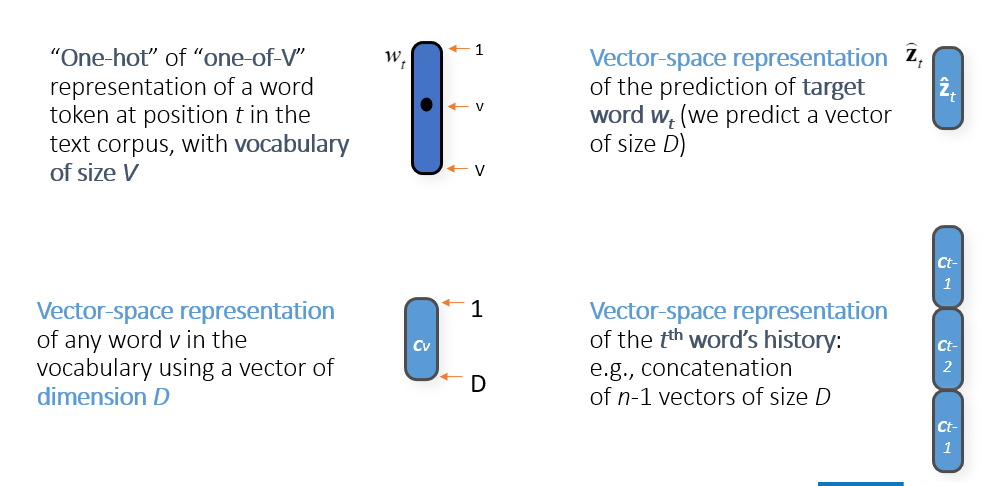
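The three steps above (forward pass, backprop, weight update) can be sketched in plain Python. This is an illustrative toy, not the lecture's network: it assumes a single tanh hidden layer, a softmax output trained with negative log likelihood, and plain SGD; the layer sizes, learning rate, and helper names are all hypothetical.

```python
import math
import random

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def forward(params, x):
    """Forward pass: h = tanh(W1 x + b1), p = softmax(W2 h + b2)."""
    W1, b1, W2, b2 = params
    h = [math.tanh(a + b) for a, b in zip(matvec(W1, x), b1)]
    z = [a + b for a, b in zip(matvec(W2, h), b2)]
    return h, softmax(z)

def loss(params, x, y):
    _, p = forward(params, x)
    return -math.log(p[y])  # negative log likelihood of the correct class y

def backward(params, x, y):
    """Backprop: chain rule from the loss back to every parameter."""
    W1, b1, W2, b2 = params
    h, p = forward(params, x)
    dz = [pi - (1.0 if i == y else 0.0) for i, pi in enumerate(p)]  # softmax + NLL
    dW2 = [[dzi * hj for hj in h] for dzi in dz]
    dh = [sum(W2[i][j] * dz[i] for i in range(len(dz))) for j in range(len(h))]
    da = [dhj * (1.0 - hj * hj) for dhj, hj in zip(dh, h)]  # tanh'(a) = 1 - tanh(a)^2
    dW1 = [[daj * xk for xk in x] for daj in da]
    return dW1, da, dW2, dz  # gradients for W1, b1, W2, b2

def sgd_step(params, grads, lr):
    """Weight update: theta <- theta - lr * dL/dtheta for every parameter."""
    W1, b1, W2, b2 = params
    dW1, db1, dW2, db2 = grads
    def step_vec(v, g):
        return [vi - lr * gi for vi, gi in zip(v, g)]
    def step_mat(M, G):
        return [step_vec(row, grow) for row, grow in zip(M, G)]
    return step_mat(W1, dW1), step_vec(b1, db1), step_mat(W2, dW2), step_vec(b2, db2)

def init(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
params = (init(3, 2), [0.0] * 3, init(2, 3), [0.0] * 2)  # 2 inputs, 3 hidden, 2 classes
x, y = [1.0, -1.0], 1  # a single made-up training example

loss_before = loss(params, x, y)
for _ in range(50):
    params = sgd_step(params, backward(params, x, y), lr=0.1)
loss_after = loss(params, x, y)
```

Repeating the update on this one example should drive `loss_after` below `loss_before`; a real LM would loop over mini-batches of many examples instead.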

Word Embeddings

- map tokens to dense low-dim vecs, which the model uses to create prob dists over the vocabulary

- these vector representations allow similarity comparison and analogies

- we construct LMs so that the model and the representations are learned jointly, i.e., the embeddings are updated along with the weights

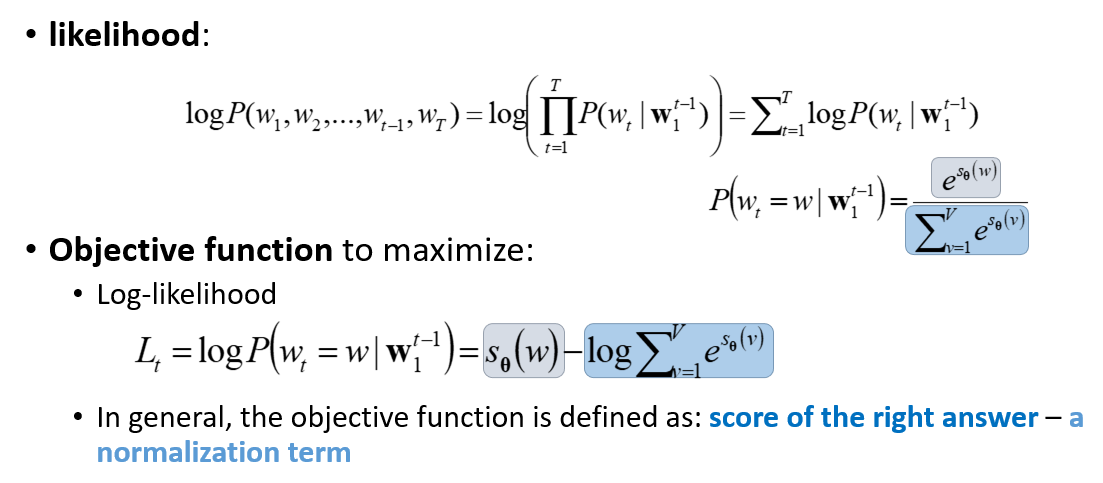
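A toy illustration of similarity comparison and analogies with embeddings. The 3-dimensional vectors below are hand-picked, hypothetical values constructed so that the analogy works out; in an actual LM they would be learned rows of an embedding matrix.

```python
import math

# Hypothetical embeddings, hand-picked for illustration only.
embeddings = {
    "king":  [0.9, 0.9, 0.1],
    "queen": [0.9, 0.1, 0.9],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.5, 0.5, 0.5],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def analogy(a, b, c):
    """Solve 'a is to b as c is to ?' via the vector b - a + c."""
    target = [vb - va + vc for va, vb, vc in
              zip(embeddings[a], embeddings[b], embeddings[c])]
    # Exclude the query words themselves, as is conventional for this test.
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(embeddings[w], target))

nearest = analogy("man", "king", "woman")
```

Here `nearest` is the word whose vector points in the direction closest to `king - man + woman`, which for these constructed vectors is `"queen"`.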

Objective Function

- the likelihood of the correct token is its softmax probability; we want to maximize it (equivalently, minimize its negative log)
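Written out as a hedged worked equation, with $z_v$ denoting the network's score (logit) for vocabulary item $v$, $V$ the vocabulary, $w_t$ the correct token at position $t$, and $c_t$ its context (all assumed notation):

$$
\log p(w_t \mid c_t) = \log \frac{\exp(z_{w_t})}{\sum_{v \in V} \exp(z_v)} = z_{w_t} - \log \sum_{v \in V} \exp(z_v)
$$

$$
\max_{\theta} \sum_{t} \log p(w_t \mid c_t; \theta) \quad \Longleftrightarrow \quad \min_{\theta} \; - \sum_{t} \log p(w_t \mid c_t; \theta)
$$

The first term rewards a high score for the correct token, while the log-sum-exp term penalizes high scores everywhere else, so the objective pushes probability mass toward the observed token.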